Friday 21 July 2006

WP1. Acquisition and modelling

WP1.1 Acquisition/Calibration of the practical setup

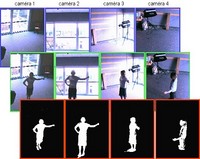

The set of cameras has to be calibrated and their relative positions determined. This can be done off-line, but the procedure has to be flexible enough to allow quick re-calibration whenever the setup is changed. Calibration is in principle a solved problem, but complete integration requires solving several practical and procedural issues.
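
As an illustration of what determining the relative position involves once each camera has been calibrated individually, the following minimal sketch composes two camera poses into the pose of one camera relative to the other. It assumes the convention `x_cam = R * x_world + t`. The function names and the example poses are hypothetical and not part of the planned system.

```python
import math

def transpose(a):
    return [list(row) for row in zip(*a)]

def mat_mul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]

def mat_vec(a, v):
    return [sum(a[i][k] * v[k] for k in range(len(v))) for i in range(len(a))]

def relative_pose(R1, t1, R2, t2):
    """Return (R12, t12) such that x_cam2 = R12 * x_cam1 + t12.

    Derivation: x_world = R1^T (x_cam1 - t1), hence
    x_cam2 = R2 R1^T x_cam1 + (t2 - R2 R1^T t1).
    """
    R12 = mat_mul(R2, transpose(R1))
    shift = mat_vec(R12, t1)
    t12 = [t2[i] - shift[i] for i in range(3)]
    return R12, t12

def rot_y(angle):
    """Rotation about the y axis (angle in radians)."""
    c, s = math.cos(angle), math.sin(angle)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]

# Hypothetical two-camera setup: the second camera is rotated by 90 degrees.
R1, t1 = rot_y(0.0), [0.0, 0.0, 2.0]
R2, t2 = rot_y(math.pi / 2), [0.0, 0.0, 2.0]
R12, t12 = relative_pose(R1, t1, R2, t2)
```

A quick consistency check is to project any world point into both cameras and verify that `R12` and `t12` map the first result onto the second.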

WP1.2 Extraction of primitives from images

Geometric modelling is usually based on primitives such as corner points, segments, regions, and silhouettes. Silhouettes are attractive because they convey precise global and local information on shapes. Their extraction often relies on an engineered setup (blue screen), which is not possible in our case, since cameras will be placed all around the scene. We will address their extraction under no assumption on the scene’s background other than that it is static. In addition, we want to accomplish this task for image sequences acquired by moving cameras.
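
The static-background assumption can be illustrated with a simple background-subtraction sketch: a per-pixel temporal median serves as the background model, and pixels that deviate from it by more than a threshold are labelled as silhouette. This is only one common baseline, not necessarily the method that will be developed. It covers the fixed-camera case only, and the function names, threshold and toy images are assumptions made for the example.

```python
from statistics import median

def background_model(frames):
    """Per-pixel temporal median over a list of grey-level frames."""
    height, width = len(frames[0]), len(frames[0][0])
    return [[median(f[y][x] for f in frames) for x in range(width)]
            for y in range(height)]

def silhouette(frame, background, threshold=25):
    """Binary mask: 1 where the frame differs from the background model."""
    return [[1 if abs(p - b) > threshold else 0
             for p, b in zip(frame_row, bg_row)]
            for frame_row, bg_row in zip(frame, background)]

# Toy 4x4 example: three frames of the empty scene with slight variations.
empty_frames = [[[level] * 4 for _ in range(4)] for level in (98, 100, 103)]
background = background_model(empty_frames)

# A frame in which a bright object covers the central 2x2 block.
frame = [[101] * 4 for _ in range(4)]
for y in (1, 2):
    for x in (1, 2):
        frame[y][x] = 200

mask = silhouette(frame, background)
```

The median makes the background model robust to occasional outliers in the training frames, which is one reason it is often preferred over a plain mean.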

WP1.3 Geometric Modelling

- Geometrical data. Silhouette-based modelling has limits, though (e.g. concave surfaces cannot be modelled). We will thus develop approaches that combine silhouettes with other, more local information, such as corner points or texture information directly. An important issue that will be investigated is the representation of models (voxel-based, meshes of triangles, implicit surfaces, etc.).

- Articulated objects. Being able to model articulated motions, like those of a person, opens the way to important applications. For example, it can form the basis of rich human-computer interaction. It also allows modelling and rendering parts of a person that are temporarily occluded in all cameras. Another use is the production and transmission of light-weight geometric models (few parameters for the articulations versus many parameters for a generic model).
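
The limitation of silhouette-based modelling with respect to concavities can be made concrete with a small voxel-carving sketch. It assumes a voxel representation and, for simplicity, three orthographic views along the coordinate axes, whereas real cameras are perspective. All names and the test object are hypothetical.

```python
def silhouettes(voxels):
    """Orthographic silhouettes of a voxel set along the x, y and z axes."""
    along_x = {(y, z) for (x, y, z) in voxels}
    along_y = {(x, z) for (x, y, z) in voxels}
    along_z = {(x, y) for (x, y, z) in voxels}
    return along_x, along_y, along_z

def visual_hull(sils, n):
    """Keep every voxel of an n^3 grid that projects inside all silhouettes."""
    along_x, along_y, along_z = sils
    return {(x, y, z)
            for x in range(n) for y in range(n) for z in range(n)
            if (y, z) in along_x and (x, z) in along_y and (x, y) in along_z}

n = 5
cube = {(x, y, z) for x in range(n) for y in range(n) for z in range(n)}
# A pit carved into the middle of the top face: a concavity.
pit = {(2, 2, z) for z in range(2, n)}
obj = cube - pit

hull = visual_hull(silhouettes(obj), n)
filled_in = hull - obj  # voxels wrongly kept by the silhouette-based model
```

In this toy case the pit is hidden in every silhouette, so the reconstructed hull is the full cube and `filled_in` equals `pit`. Recovering such regions requires additional cues such as texture.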

WP1.4 Photometric modelling and modelling of lighting

To produce a coherent appearance and to correctly simulate arbitrary lighting conditions for the augmented scene, the effects of the objects' photometric properties must be separated from those of the existing lighting. General approaches for doing this from video sequences do not seem to exist. Often, the positions of light sources are estimated under simple assumptions about the photometric properties of surfaces, and vice versa. We want to improve upon this. A first, off-line approach consists of taking images of an object with known photometric properties, placed in the scene, so as to measure the lighting (position and color). Another line of work will aim at developing on-line methods. We are considering the following strategies:

- Ideally, one would wish to recover complete BRDF models for observed objects. This is not feasible from just a few video sequences under constant lighting. However, we will investigate what parts of the BRDF can be reasonably recovered under various assumptions.

- An alternative approach is to estimate the coefficients of parametric reflection models, such as the Phong model. We would like to investigate different models of varying complexity. The on-line choice of model may be addressed by model selection methods, for example information-theoretic criteria such as AIC (Akaike Information Criterion) or methods based on MDL (Minimum Description Length).

- Based on a catalogue of BRDF models for the different materials that might be encountered, we aim to classify surface types. Such a classification process may also result in a better spatial segmentation of surfaces of different types, compared to the above approaches.
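
To make the idea of model selection between reflection models more tangible, the sketch below fits a purely diffuse (Lambertian) model and a diffuse-plus-specular Phong model to synthetic intensity samples by least squares, then compares them with AIC in its least-squares form, `n * ln(RSS / n) + 2k`. Several simplifications are assumed: a 2D configuration, a known light and view direction, a fixed Phong exponent (so that the fit stays linear), and synthetic data with a small deterministic perturbation. All names and numerical values are hypothetical.

```python
import math

def shading_terms(theta, theta_light, theta_view, shininess):
    """Diffuse and specular terms for a normal at angle theta (radians, 2D)."""
    diffuse = max(0.0, math.cos(theta - theta_light))
    # The mirror direction of the light about the normal has angle
    # 2 * theta - theta_light; the specular term compares it to the view.
    specular = max(0.0, math.cos(2 * theta - theta_light - theta_view)) ** shininess
    if diffuse == 0.0:
        specular = 0.0  # surface faces away from the light
    return diffuse, specular

def fit_lambert(samples):
    """Least-squares fit of I = kd * d. Returns ((kd,), RSS)."""
    kd = sum(d * i for d, _, i in samples) / sum(d * d for d, _, _ in samples)
    rss = sum((i - kd * d) ** 2 for d, _, i in samples)
    return (kd,), rss

def fit_phong(samples):
    """Least-squares fit of I = kd * d + ks * s via the 2x2 normal equations."""
    sdd = sum(d * d for d, _, _ in samples)
    sds = sum(d * s for d, s, _ in samples)
    sss = sum(s * s for _, s, _ in samples)
    sdi = sum(d * i for d, _, i in samples)
    ssi = sum(s * i for _, s, i in samples)
    det = sdd * sss - sds * sds
    kd = (sdi * sss - sds * ssi) / det
    ks = (sdd * ssi - sds * sdi) / det
    rss = sum((i - kd * d - ks * s) ** 2 for d, s, i in samples)
    return (kd, ks), rss

def aic(rss, n, k):
    """AIC for least squares with k parameters, up to an additive constant."""
    return n * math.log(rss / n) + 2 * k

# Synthetic samples from a Phong surface (kd = 0.6, ks = 0.3, exponent 10).
n = 40
samples = []
for idx in range(n):
    theta = math.radians(-60 + 120 * idx / (n - 1))
    d, s = shading_terms(theta, math.radians(20), math.radians(-10), 10)
    intensity = 0.6 * d + 0.3 * s + 0.005 * math.sin(7 * idx)  # perturbation
    samples.append((d, s, intensity))

(kd_l,), rss_l = fit_lambert(samples)
(kd_p, ks_p), rss_p = fit_phong(samples)
aic_lambert = aic(rss_l, n, 1)
aic_phong = aic(rss_p, n, 2)
best = "phong" if aic_phong < aic_lambert else "lambert"
```

The model with the lower AIC is preferred. Here the specular highlight cannot be explained by the diffuse term alone, so the extra parameter of the Phong model is justified despite the penalty.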

